Chapter 5: Linear Regression Algorithms

# Example01: Using PolynomialFeatures to address underfitting

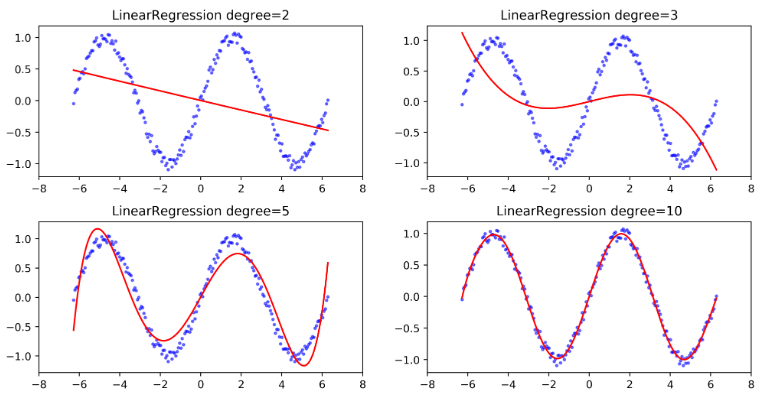

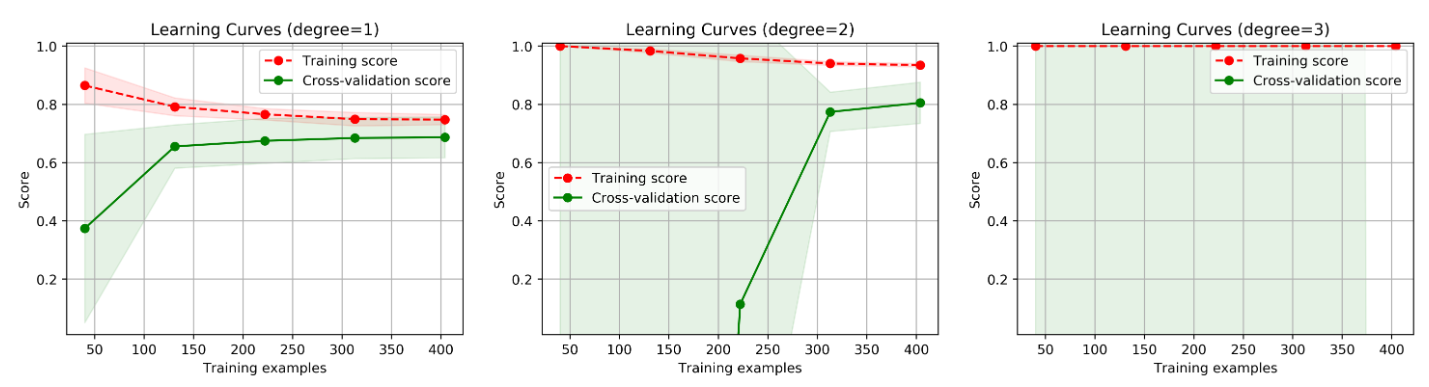

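A plain linear regression model is very simple, so it underfits easily. As discussed earlier, there are two ways to address underfitting:

- Add physical features, such as the location of the house or the direction it faces.

- Add mathematical features that have no physical meaning of their own.

Use `sklearn.preprocessing.PolynomialFeatures` to generate such features: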

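```python
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures


def polynomial_model(degree=1):
    # Expand the inputs into all polynomial terms up to `degree`;
    # include_bias=False because LinearRegression fits its own intercept.
    polynomial_features = PolynomialFeatures(degree=degree, include_bias=False)
    # Note: the `normalize` parameter of LinearRegression was removed in
    # scikit-learn 1.2, so it is not passed here.
    linear_regression = LinearRegression()
    pipeline = Pipeline([
        ("polynomial_features", polynomial_features),
        ("linear_regression", linear_regression),
    ])
    return pipeline
```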
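To make the effect of the polynomial degree concrete, here is a minimal sketch that first prints what `PolynomialFeatures` generates for a single sample and then fits the pipeline on toy data. The noisy quadratic data set, the random seed, and the `scores` dictionary are assumptions made for illustration only; `polynomial_model` is redefined so the snippet runs on its own.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures


def polynomial_model(degree=1):
    """Polynomial feature expansion followed by ordinary least squares."""
    return Pipeline([
        ("polynomial_features", PolynomialFeatures(degree=degree, include_bias=False)),
        ("linear_regression", LinearRegression()),
    ])


# For a sample (a, b) = (2, 3), degree 2 yields a, b, a^2, a*b, b^2.
expanded = PolynomialFeatures(degree=2, include_bias=False).fit_transform([[2.0, 3.0]])
print(expanded)

# Hypothetical toy data: a noisy quadratic curve.
rng = np.random.default_rng(0)
X = np.linspace(-3, 3, 50).reshape(-1, 1)
y = 0.5 * X.ravel() ** 2 + X.ravel() + rng.normal(scale=0.3, size=50)

scores = {}
for degree in (1, 2, 3):
    model = polynomial_model(degree).fit(X, y)
    scores[degree] = model.score(X, y)  # R^2 on the training data
    print(f"degree={degree}: train R^2 = {scores[degree]:.3f}")
```

On data like this, the degree-1 model should score noticeably lower than the degree-2 model, which is the underfitting that the extra polynomial terms are meant to fix. Training scores alone cannot reveal overfitting, so a held-out set is still needed when choosing the degree.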

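Note: if a step in the Pipeline is a class you wrote yourself, the Pipeline calls its `.fit_transform` method by default, so every intermediate step must implement `fit` and `transform`; the final step only needs to implement `.fit()`.

Use `mean_squared_error` to compute the mean squared error (MSE); take its square root to get the root mean squared error (RMSE):

```python
from sklearn.metrics import mean_squared_error
```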
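The note about custom steps and the error metric can be sketched together as follows. The `SquareFeatures` class and the noise-free toy data are hypothetical and exist only for this illustration; inheriting from `TransformerMixin` is one common way to obtain `fit_transform` from `fit` and `transform`.

```python
import numpy as np
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import Pipeline


class SquareFeatures(BaseEstimator, TransformerMixin):
    """Hypothetical custom step: appends the square of every column."""

    def fit(self, X, y=None):
        return self  # nothing to learn from the data

    def transform(self, X):
        X = np.asarray(X, dtype=float)
        return np.hstack([X, X ** 2])


# Hypothetical toy data: an exact quadratic relationship.
X = np.arange(10, dtype=float).reshape(-1, 1)
y = 3.0 * X.ravel() ** 2 + 2.0

pipeline = Pipeline([
    ("square_features", SquareFeatures()),      # intermediate step: fit + transform
    ("linear_regression", LinearRegression()),  # final step: only needs fit
])
pipeline.fit(X, y)

mse = mean_squared_error(y, pipeline.predict(X))
rmse = np.sqrt(mse)  # mean_squared_error returns the MSE, not the RMSE
print(f"MSE = {mse:.6f}, RMSE = {rmse:.6f}")
```

Because the target is an exact quadratic and the custom step supplies the squared term, the fit should be essentially perfect and both error values should be close to zero.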

# Example02: House price prediction

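```python
from sklearn import datasets

# Note: load_boston was deprecated in scikit-learn 1.0 and removed in 1.2,
# so this snippet requires an older scikit-learn release.
boston = datasets.load_boston(return_X_y=False)
X = boston.data
y = boston.target

# Alternatively, unpack the (data, target) tuple directly
X, y = datasets.load_boston(return_X_y=True)
```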
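Once the data is loaded, a typical next step is to split it, fit a model, and score it. The sketch below is only an illustration of that workflow: because `load_boston` is unavailable in recent scikit-learn releases, it substitutes the bundled `load_diabetes` data set, which is an assumption of this example and not the housing data used above. The split ratio and random seed are likewise arbitrary choices.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Stand-in regression data set (not the Boston housing data).
X, y = load_diabetes(return_X_y=True)
print(X.shape, y.shape)

# Hold out 20% of the samples for evaluation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = LinearRegression().fit(X_train, y_train)
train_score = model.score(X_train, y_train)  # R^2 on the training split
test_score = model.score(X_test, y_test)     # R^2 on the held-out split
test_rmse = np.sqrt(mean_squared_error(y_test, model.predict(X_test)))

print(f"train R^2 = {train_score:.3f}, test R^2 = {test_score:.3f}")
print(f"test RMSE = {test_rmse:.2f}")
```

A training score well below 1 suggests underfitting, in which case swapping `LinearRegression()` for a polynomial pipeline with a higher degree is worth trying. A large gap between the training and test scores points the other way, toward overfitting.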


Last updated: 2022/04/06, 15:04:00